Editor: Michael Orr

Technical Editor: Heather Stern

Senior Contributing Editor: Jim Dennis

Contributing Editors: Ben Okopnik, Dan Wilder, Don Marti

TWDT 1 (gzipped text file) and TWDT 2 (HTML file) are files containing the entire issue: one in text format, one in HTML. They are provided strictly as a way to save the contents as one file for later printing in the format of your choice; there is no guarantee of working links in the HTML version.

http://www.linuxgazette.com/

This page maintained by the Editor of Linux Gazette, gazette@ssc.com

Copyright © 1996-2001 Specialized Systems Consultants, Inc.

The Mailbag

Send tech-support questions, answers and article ideas to The Answer Gang <linux-questions-only@ssc.com>. Other mail (including questions or comments about the Gazette itself) should go to <gazette@ssc.com>. All material sent to either of these addresses will be considered for publication in the next issue. Please send answers to the original querent too, so that s/he can get the answer without waiting for the next issue.

Unanswered questions might appear here. Questions with answers--or answers only--appear in The Answer Gang, 2-Cent Tips, or here, depending on their content. There is no guarantee that questions will ever be answered, especially if not related to Linux.

Before asking a question, please check the Linux Gazette FAQ to see if it has been answered there.

Compact Flash Card

I have a problem adding a new file system to a device called mediaEngine, a product of brightstareng.com. It has a slot for a Compact Flash card. I bought a Compact Flash card and inserted it, but the device does not recognise the card; it reports an I/O error. mke2fs does not work properly, and I am not even able to mount the card.

Please help me with this.

Thanking you in advance,

kamalakanth

Booting x86 from flash using initrd as root device

Hello Everybody,

I am trying to develop an x86 target which boots from Flash. I have taken the BLOB bootloader (from the LART project, which is based on an ARM processor) and am modifying it for x86. So far I am able to get the Linux kernel booted, but when it comes to mounting the root file system I am stuck. (The blob downloads the kernel image and initrd image into RAM from Flash or through the serial line.)

1. I am getting the compressed kernel image in RAM at 0x100000 through serial line.

2. I am getting the compressed ramdisk image(initrd) in RAM at 0x400000 through serial line.

3. The kernel gets uncompressed and boots correctly up to the point of displaying the message "RAMDISK: Compressed image found at block 0" and then hangs.

4. After debugging I have found that control reaches the gunzip function in linux/lib/inflate.c but never comes out of the function.

5. The parameters I have set at the beginning of the setup_arch function in linux/arch/i386/kernel/setup.c are as follows:

ORIG_ROOT_DEV = 0x0100

RAMDISK_FLAGS = 0x4000

INITRD_START = 0x400000

INITRD_SIZE = 0xd4800 (size of compressed ram disk image)

LOADER_TYPE = 1

Has anyone faced such a problem before? If so, what needs to be done?

Are the values for the parameters mentioned above correct? Are they the only information the kernel needs in order to locate and uncompress the RAMDISK image and boot from it?

Is there any bootloader readily available for the x86 platform that boots from Flash and also has a serial downloading facility?

Please help

With Kind Regards,

manohar
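For readers following along, the parameter values quoted in the letter can be decoded with a few lines of Python. This is only a sketch for checking the numbers, not a diagnosis of the hang. The flag masks are assumed to match the RAMDISK_* definitions in 2.4-era arch/i386/kernel/setup.c, and the function names are made up for illustration, so verify against your own kernel tree.

```python
# Decode the boot parameters quoted in the letter above.
# ASSUMPTION: these masks mirror the RAMDISK_* defines in 2.4-era
# arch/i386/kernel/setup.c; check them against your own source tree.
RAMDISK_IMAGE_START_MASK = 0x07FF
RAMDISK_PROMPT_FLAG = 0x8000
RAMDISK_LOAD_FLAG = 0x4000


def decode_root_dev(dev):
    """Split an old-style 16-bit device number into (major, minor)."""
    return (dev >> 8) & 0xFF, dev & 0xFF


def decode_ramdisk_flags(flags):
    """Break RAMDISK_FLAGS into its start offset and two flag bits."""
    return {
        "image_start": flags & RAMDISK_IMAGE_START_MASK,
        "prompt": bool(flags & RAMDISK_PROMPT_FLAG),
        "load": bool(flags & RAMDISK_LOAD_FLAG),
    }


def initrd_range(start, size):
    """Return (first address, address just past the end) of the initrd."""
    return start, start + size


if __name__ == "__main__":
    print(decode_root_dev(0x0100))        # major 1 is the RAM disk driver
    print(decode_ramdisk_flags(0x4000))
    first, end = initrd_range(0x400000, 0xD4800)
    print(hex(first), hex(end), 0xD4800, "bytes")
```

Read this way, ORIG_ROOT_DEV = 0x0100 is major 1, minor 0 (the first RAM disk), RAMDISK_FLAGS = 0x4000 sets only the load bit, and the compressed image occupies 0x400000 up to 0x4d4800 (870400 bytes).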

KEEP UP THE GOOD WORK !!!!

Remember when you said:

WinPrinter Work-around

From harmbehrens on Sat, 01 May 1999

Hello, is there any work-around to get a gdi printer (Star Wintype 4000) to work with Linux :-?

Harm

. . .

I presume that these are NOT what you wanted to hear. However, there is no way that I know of to support a Winprinter without running drivers that are native to MS Windows (and its GDI --- graphics device interface --- APIs).

What about using one of the Windows emulators like WINE (http://www.winehq.org) ? -- Mike

Actually, a very few GDI- aka "winprinters" are supported - some Lexmarks. I saw them first as debian packages but I'm pretty sure Linuxprinting.org has more details. The notes said that rumor has it some other GDI printers work with those, but it's a bit of effort and luck. Rather like winmodems... -- Heather

I agree 100% about the GDI printers! (I refer to them as Goll Damn M$ Imitators.) Unfortunately, until the recent developments in the federal court case, M$ has not been checked at all. Some parts of what they, as a monopoly, have done have been good for the IT community, while other things have really crapped on people WHO THINK!

Thank GOD !!!!! Linux is around to be a thorn in their side ...

The other insidious thing printer manufacturers have done is stop building as many PostScript printers. They are all using the friggin Windows drivers to do everything ... . So I have begun to play the stinkin game by mounting SMALL 486 DX2/66 16 Meg Linux print servers running SAMBA. The newest version of SAMBA even does PDC correctly.

Linux has been my savior in many times of need! I have been able to use those stinking 386 boat anchors as LRP routers or firewalls, and the small 90 Pent to 166 Pent machines are becoming LTSP Linux X Window terminals. I still have stinking problems from time to time, yet I DON'T HAVE TO REBOOT EVERY 24 [&^%$!#]* days PER TECHNET (M$'s answer to internal pay support) FOR NT SERVERS! I have Linux servers with uptimes of 200+ days IN PRODUCTION!

I'll sign off , enough rambling and ranting for one email. I just found your stuff through linuxdoc.org, and I thought you HIT THE NAIL ON THE HEAD!

KEEP UP THE GOOD WORK!

The real question is, is it worth spending 50 bucks instead of 200 or more, when you're going to spend a bunch of time making sure your driver is wired up right? I have an unfair advantage, since I live where these things are sold readily, but I get to say no ... I can spend about 80 bucks on a color printer which is actually listed as fully supported.

You may be able to mail order a good printer at a decent price. On the other hand, if exploring how these things all tie together under the hood is fun, then you can improve the state of the art for such printers, and maybe some fine day folks will be able to easily use "the GDI printer that came with their MS windows" without having to fight with it like this.

-- Heather

not happy

On Wed, Jul 04, 2001 at 09:26:35PM +1000, 32009318 wrote:

I know how annoyed you guys at the Linux Gazette must feel, as I have been reading many back issues of the Gazette and finding lots of cool tips and tricks, but the question always comes up:

CAN YOU SOLVE WINDOWS PROBLEMS? People just don't get it: you're called the Linux Gazette and you help with Linux questions.

Unfortunately, we also have no idea where they find the reference to us that gives them the impression we do anything in Windows... more's the pity, since we can't beg the webmaster to take the link down.

They can certainly trip on us in search engines though, especially the worse ones. I can imagine someone frustrated with some Windows networking matter tripping over our many notes about Samba, since the point is to link up to some MSwin boxes... -- Heather

(you do it very well i should add)

Oh thank you, we like to hear that! -- Heather

The problem is, most people who ask Windows questions found the submission address via a search engine, and have no idea the address belongs to Linux Gazette or even know what Linux Gazette is. Perhaps we should have used an address like linux-questions-only, but we can't stop honoring the old addresses since they are published in so many back issues and some are on CD-ROMs that we can't change. -- Mike

You should clearly state this in your next issue, or be humorous and rename yourself The Not Windows Gazette.

They're not the only odd ones out - we've had IRIX, PC-MOS, and other odd OS users crop up before too.

[Ben] Even had someone trying to get root access to their rhubarb, recently...

Funny you should mention a name change though. We have changed the name of the Answer Gang mailing list to "linux-questions-only@ssc.com" -- Heather

Many TAG threads have humorous comments and/or rants about off-topic questions. Heather also tries to guide people in her "Greetings from Heather Stern" blurb about how to ask a good question and how not to ask a bad question. Finally, I have started making fun of off-topic questions on the Back Page of the past few issues. There's now a section on the Back Page called "Not The Answer Gang", as well as "Wackiest Topic of the Month". -- Mike

keep up the good work with linux

Thanks bunches, we're glad you enjoy the read! -- Heather

Blinking Icons

I love reading LG every month, especially TAG and 2-Cent Tips. But the new blinking question and exclamation icons are somewhat annoying. Any chance of going back to the old ones?

thanks for all the good work!

blinking? They're not supposed to blink. In fact, if they are it's been that way for YEARS ... since I first provided .gif files with the ! and ? in them in blue, instead of with those stupid "!" and "?" that nobody could read.

It would mean I accidentally had layers in them. But there's no loop command, so even if you see that briefly it should stop. Is that what you are seeing, or does it continue to blink? Did you change browsers at all recently? If so to which one?

We have been trying to improve browser compatibility lately anyway. Thanks for bringing it up.

[ turning to my fellow editor ] Mike, did anybody run any scripts against the graphic images recently that I need to know about?

-- Heather

No, we haven't changed those images. I've threatened to change all .gif's to .png or .jpg and change all the links, but haven't done it yet. I've only used blinking text once or twice and that wasn't in the TAG column.

Also, LG is probably the only site in the world that requires NON-ANIMATED logo images from its sponsors. Because I personally have a strong intolerance for unnecessary animations.

-- Mike

The "blinking icons" are actually three-layer GIFs with one background layer (the white bubble) with 0ms display time and two layers (question mark and shadow) with 100ms display time in combine mode (each is overlaid on the previous one).

Opera 5.0/Linux displays them on a strange blue background (it seems to have a GIF problem, with other GIFs too, not only these little ones).

If you want a scriptable tool (besides gimp) there is a program called pngquant which can do the color (and size) reduction on png files which convert will not do.

-- K.-H.

-- Heather

question

Everyone I know is having the same problem. Not only is the Telnet completely outdated, but it simply does not work for people off campus in most areas...

Hint: putty, one of the ssh clients for Windows that we keep pushing, is also a better "normal telnet" client for Windows. -- Heather

And last weekend I discovered that the public library in Halifax, Nova Scotia, has putty as the default terminal emulator on their public Windows terminals!!! Way to go, library! And switching between telnet and ssh in putty is easy: just choose one radio button or the other.

Unkudos to the Second Cup cybercafe in Halifax. The Start menu has a special item for Telnet but when you choose it, you get a dialog saying, "this operation is prohibited by the system administrator". -- Mike

you call yourself the "answer guy" and all you know how to be is a sarcastic little bastard..

If you saw the hundreds of messages we receive every month and the high percentage of them that are questions totally outside our scope, you might become a sarcastic little bastard too. -- Mike

We all have our good days, and our sarcastic days, and the point of our column is that we're real people, answering in just the same way we would if you asked us at the mall while we were buying a box of the latest Linux distro.

A particular thing to note is very few of us are Windows people. Most of us not at all - and others have had bad real-life experiences with the OS you presently favor. So not everyone will be cheer and light towards things we consider to be poor sysadmin practices. Especially things which would be poor sysadmin practices even in an all Microsoft corporate netcenter.

At least two of us have enough Windows experience to attempt answers in that direction -- but this is the LINUX Gazette. If your questions are not about LINUX at least partially, then we really didn't want to hear from you... so you should be glad you got any answer at all...

... and curiously enough, some Windows people have gotten real answers for themselves in Linux documents once we point them the right way.

The practice of running an open service needs more care than just "clicking Yes" on the NT service daemon, and some of these services only make sense in a locked up environment.

Many but not all of The Answer Gang feel that telnet is now one such service. By mailing us you ask our opinion, and that opinion is real, so we say it. You do not have to like our opinions. We don't have to like yours. We can still share computing power.

A free society is one where it is safe to be unpopular.

you make yourself seem very unintelligent to me and have you ever heard of "If you can't say anything nice, don't say it at all"?

As if calling us a bastard is nice, yet you bothered to say that. You know absolutely nothing of the parentage of any member of this group unless you read our Bios (http://www.linuxgazette.com/issue67/tag/bios.html) ...

... and we know that you have not because you only speak to one person.

Parentage (or lack of one) doesn't necessarily lead to being technologically clued, but having technical or scientifically inclined parents seems to help.

Apparently not. The question Nellie asked was a valid one. Too bad she does not know all the mumbo jumbo computer jargon...maybe the next time you should self proclaim yourself as the "Smart-ass Guy" instead. Miriam Brown

I believe "curmudgeon" is the term you're looking for, and yes, Jim Dennis labels himself to be one. Proudly.

Interestingly, a querent this month has asked where to read up on the techy mumbo jumbo words so they can learn more about Linux and speak more freely with their geeky friends. Our answers to that will actually be useful to many readers.

Poor English is tolerated to a fair degree on the querent's side (that is, as long as we can figure out what querents are saying; if we can't, it's hard to even try to answer them). Each Answerer's personal style is mostly kept - so some are cheerful, some are grumpy.

PS...Don't answer guys usually use spell check?

It is not required to know how to use a spell checker, in order to know how to rebuild a kernel. You have delusions of us being some glossy print magazine like Linux Journal. (ps. Our host, SSC publishes that. It does get spell checked and all those nice things. Go subscribe to it if you like. End of cheap plug.)

Nobody here is paid a dime for working on the Gazette specifically, so our editorial time is better spent on link checking and finding correct answers... er, well, we try... than spellos. We have occasionally had people complain about this point very clearly and loudly, but there is not room in the publishing schedule for them to squish themselves in between and still meet deadline. We suggested that they make their own site carry the past issue after fixes (our copyright allows this) but the people have then always backed down and gone away. We'd almost certainly merge their repairs if they made some, but nobody has taken up on that offer yet. Oh well. Let us know if you want to start the Excellent Speller's Site - we'll cheer for you. Maybe some other docs in the Linux Documentation Project (LDP) could stand a typo scrub too.

Oddly, many people read LG religiously because it's written in person to person mode and doesn't spray gloss all over the articles and columns that way. You will get ecstatic excitement at successes and growling at bad ways to do things and every emotion in between.

No, many of the querents don't spell check either, but we still answer them.

I hope you enjoyed your time flaming, but we have people asking questions about Linux to get back to.

"Those were the days"

I have this dream of contributing something wonderfully useful to the Gazette, but it ain't going to happen today. . .

No, I noticed the ravings on The Back Page of issue 67, and had to send you the lyrics of "Those were the days":

. . .

(It looks like the rest was pretty good up til the last verse)

. . .

Didn't need no welfare state

Everybody pulled his weight

Gee, our old LaSalle ran great

Those were the days.

But better yet, is the Simpsonized version --

For the way the BeeGees played

Films that John Travolta made

Guessing how much Elvis weighed

Those were the days

And you knew where you were then

watching shows like Gentle Ben

Mister we could use a man like Sheriff Lobo again

Disco Duck and Fleetwood Mac

Coming out of my eight-track

Michael Jackson still was black,

Those were the days

Maybe next time I write in, I'll have something more useful.

-- Pete Nelson

Thanks, Pete, glad we could amuse. I think we need a linux version, or maybe a BSD one... -- Heather

RE: Linux Gazette Kernel Compile Article.

Hi,

First I must commend you on providing a service to all the Linux users out there trying to get started on rolling their own kernel. I would just like to point out a few things I found somewhat confusing about your article.

You compiled in "math emulation" support even though you have a CPU with a built-in math co-processor; in this case the math emulation will never be used and essentially wastes memory. Secondly, you selected SMP support for your uniprocessor (UP) system. On some occasions this can cause problems with specific UP motherboards, causing them not to boot or certain kernel modules not to load, in addition to being slower and taking up more memory than a non-SMP kernel. Also, you might want to chain the steps of your build procedure with double ampersands, so that each step runs only if the previous one succeeded, i.e. "make dep && make bzImage && make modules && make modules_install ....."

That aside, it's great that you're willing to share your experiences and knowledge with the rest of the Linux community.

Regards,

Zwane
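The shell's && already gives the stop-on-first-failure behaviour Zwane describes. For anyone who wants to see that logic spelled out, here is a minimal Python sketch. The helper name run_chain is made up for illustration, and the step list simply repeats the commands quoted in the letter; nothing here is taken from the original article.

```python
import subprocess
import sys

# Build steps quoted in the letter, in order. Listed as data only;
# nothing below runs them automatically.
KERNEL_STEPS = [
    ["make", "dep"],
    ["make", "bzImage"],
    ["make", "modules"],
    ["make", "modules_install"],
]


def run_chain(commands):
    """Run commands in order and stop after the first one that fails.

    Mirrors `a && b && c` in the shell. Returns the list of commands
    that were actually started.
    """
    started = []
    for cmd in commands:
        started.append(cmd)
        if subprocess.run(cmd).returncode != 0:
            break  # later steps are skipped, just as with &&
    return started


if __name__ == "__main__":
    # Harmless stand-ins for build steps: one succeeds, one fails.
    ok = [sys.executable, "-c", "pass"]
    fail = [sys.executable, "-c", "raise SystemExit(1)"]
    print(len(run_chain([ok, fail, ok])))  # the third command never starts
```

To run the real build this way, you would call run_chain(KERNEL_STEPS) from the top of the kernel source tree; in everyday use the one-line shell version does the same job.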

Parallel processing

I would like to contact the author of the above article, which appeared in the April 2001 edition of your magazine.

The author's name in all our articles is a hyperlink to his e-mail address. Rahul Joshi's is jurahul@hotmail.com -- Mike

response to: Yet Another Helpful Email

I was pleased to see a letter by Benjamin D Smith that compares learning Windows to learning Unix by drawing a mental graph of the respective learning curves, because I thought it would set straight a lot of people who misuse the term "steep learning curve."

But then Smith went ahead and misused the term himself, in a way wholly inconsistent with the picture he drew.

To set the record straight, allow me to explain what a learning curve is, and in particular what a steep one is all about.

The learning curve is a graph of productivity versus time. As time passes, you learn stuff and your productivity increases (except in weird cases).

Windows has a steep learning curve. You start out useless, but with just a little instruction and messing around, you're already writing and printing documents. The curve rises quickly.

Unix has a very shallow learning curve. You start out useless, and after a day of study, you can still do just a little bit. After another day, you can do a little bit more. It may be weeks before you're as productive as a Windows user is after an hour.

Smith's point, to recall, was that the Windows learning curve, while steep, reaches a saturation point and levels off. The Unix curve, on the other hand, keeps rising gradually almost without bound. In time, it overtakes the Windows curve.

-- Bryan Henderson
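Bryan's picture can be turned into a toy model: one curve that rises fast and then saturates, and one that rises slowly but never stops. The sketch below is only an illustration. The curve shapes, the constants and the function names are all invented for the example and are not measurements of anything.

```python
import math


def steep_curve(t, ceiling=10.0, tau=1.0):
    """Productivity that rises quickly, then levels off at `ceiling`."""
    return ceiling * (1.0 - math.exp(-t / tau))


def shallow_curve(t, rate=0.5):
    """Productivity that rises slowly but keeps rising."""
    return rate * t


def crossover(step=0.01, limit=1000.0):
    """First sampled time at which the shallow curve overtakes the steep one."""
    for i in range(1, int(limit / step)):
        t = i * step
        if shallow_curve(t) > steep_curve(t):
            return t
    return None


if __name__ == "__main__":
    print(steep_curve(1.0), shallow_curve(1.0))  # steep curve is far ahead early on
    print(crossover())                           # later, the shallow curve overtakes it
```

With these made-up constants the steep curve is well ahead after one time unit, and the shallow curve overtakes it at roughly twenty time units. The numbers mean nothing; only the shape matches the argument in the letter.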

anser guy

Are you still the answer guy, and do you still answer questions? If so, I have one that's been bugging me for a year now. Just let me know,

Thanks!

It's an Answer Gang now. Jim Dennis is still one of us.

We answer some of the hundreds of Linux questions we get every month. Questions which are not about Linux get laughed about, but have a much lower chance of ever getting an answer. They might be answered in a Linux-specific way.

So if you've a Linux question, send it our way.

Mistake

I just looked at your issue 41 (I know that is not really recent ...), but in the article by Christopher Lopes about CUP, there is a mistake...

I tested it and saw that it does not work correctly in all cases. The operator '-' needs a higher priority; otherwise we get 8-6+9 = -7, because your parser first computes 6+9 = 15 and then 8-15 = -7. To solve this problem it is enough to create a state between expr and factor, representing the fact that the operator '-' has priority over '+'.

Cordially.

Xavier Prat. MIAIF2.

See attached misc/mailbag/issue41-fix.CUP.txt
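The arithmetic in Xavier's letter is easy to reproduce. The Python sketch below is not CUP code and not the grammar from the article; it just evaluates the same token list two ways, grouping from the left and grouping from the right, to show where the -7 comes from. The function names are hypothetical.

```python
def eval_left_to_right(tokens):
    """Group from the left: 8 - 6 + 9 is read as (8 - 6) + 9."""
    result = tokens[0]
    for i in range(1, len(tokens), 2):
        op, operand = tokens[i], tokens[i + 1]
        result = result + operand if op == "+" else result - operand
    return result


def eval_right_to_left(tokens):
    """Group from the right: 8 - 6 + 9 is read as 8 - (6 + 9)."""
    if len(tokens) == 1:
        return tokens[0]
    rest = eval_right_to_left(tokens[2:])
    return tokens[0] + rest if tokens[1] == "+" else tokens[0] - rest


if __name__ == "__main__":
    expr = [8, "-", 6, "+", 9]
    print(eval_left_to_right(expr))   # 11, the expected answer
    print(eval_right_to_left(expr))   # -7, the result reported in the letter
```

Any fix that makes the parser group 8-6 before adding 9, whether through an extra grammar level as the letter suggests or through left-associative operators, gives 11 for this input.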

An lgbase question

I want to install all of the newest Linux Gazette issues on one of our Linux machines at work. Sounds easy enough.

Consider that I have approximately 12 months' worth of Linux Gazette issues AND each lgbase that I downloaded with each issue. Is the lgbase file cumulative? In other words, can I install the latest and greatest lgbase, and then install (you know -- copy them to the LDP/LG directory tree) all of the Linux Gazette issues?

Yes. There's only one lg-base.tar.gz, which contains shared files for all the issues. So you always want the latest lg-base.tar.gz.

However, once you've installed it, you don't have to download it again every month. Instead, you can download the much smaller lg-base-new.tar.gz, which contains only the files that are new or changed since the previous issue. But if you miss a month, you'll need to download the full lg-base.tar.gz again to get all the accumulated changes.

Always untar lg-*.tar.gz files in the same directory each month. They will unpack into a subdirectory lg/ with everything in the correct location.
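The rule above boils down to a small decision, sketched here in Python. The two archive names come from the answer itself; the function name and the idea of tracking issue numbers as integers are assumptions made for the example.

```python
def pick_base_archive(last_installed_issue, current_issue):
    """Choose which base archive to fetch for `current_issue`.

    `last_installed_issue` is the issue whose base files are already
    unpacked, or None if no base has ever been installed.
    """
    if last_installed_issue is None:
        return "lg-base.tar.gz"        # first install: need everything
    gap = current_issue - last_installed_issue
    if gap <= 0:
        return None                    # already up to date
    if gap == 1:
        return "lg-base-new.tar.gz"    # only last month's changes
    return "lg-base.tar.gz"            # missed a month: take the full set


if __name__ == "__main__":
    print(pick_base_archive(None, 69))
    print(pick_base_archive(68, 69))
    print(pick_base_archive(60, 69))
```

Whichever archive is chosen, it is unpacked in the same directory as always, so the files land in the lg/ subdirectory.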

Thanks Mike,

You've saved me a lot of time. The system (SuSE 7.0 distribution) had an up-to-date base up until March last year. Your answer saved me lots of untarring operations. And yes, I do put the stuff in the same directory each month on my home system.

Thanks for your fine magazine, and again keep up the good work (all of you),

Chris G.

About which list is which

[Resent because Majordomo thought it was a command. You have to watch that word s-u-b-s-c-r-i-b-e near the top of messages. -- Mike]

----- Forwarded ------

Hi Heather,

Sorry, just another dumb newbie question. I have recently signed up to the tag-admin list but not the tag list. I want to be subbed to the Tag list and as a result I have just tried to subscribe to tag by sending the following to majordomo@ssc.com:

subscribe tag hylton@global.co.za

Given the pattern for the other one, I'm guessing that sending the word "subscribe" to the address tag-request@ssc.com should work too.

I, as yet, haven't recd any notifications that a new Linux Gazette has been added to the files area.

For that, you don't want tag nor tag-admin, you want to send a note to lg-announce-request@ssc.com and subscribe to lg-announce. That doesn't say much except that the gazette is posted.

What you will find here on tag-admin are precursor discussions; talk about what should or shouldn't get published, need to tweak deadlines, and some other things that are either water-cooler talk or "infrastructure" matters.

Do the announcements of the new Linux Gazettes come out on the TAG-Admin list or only on Tag?

Neither.

If you join the TAG list, you will be inundated with a large number of newbie and occasional non-newbie computing questions, not all linux-related... and a certain amount of spam that slips the filters, not all of it in English... and a certain number of utterly dumb questions with no relation at all to computing (apparently a side effect of the word "homework" being used so often here). I'd dare say most of the spam is not in English. Since we sometimes get linux queries in non-English languages we can't just chop them off by character set from the list server, but they're easy to spot and delete.

You will also see the answers flow by from members of the Gang, and efforts to correct each other. If you only visit the magazine once a month, you see fewer answers, some of them get posted in 2c Tips, and they have been cleaned up for readability.

So I can see reasons why someone who doesn't feel like answering questions might want to join the TAG list and lurk, but I'm not sure which of these you wanted.

Puzzled.

Hylton

Thank you Heather,

You as a member of TAG have certainly answered my questions.

There has been a message sent to majordomo@ssc.com to unsub/scribe from both the TAG and TAG-Admin lists, as I do not want to receive idle watercooler chat that I cannot reply to immediately, in a watercooler fashion, due to my country's monopolistic telecoms provider.

ob TagAdmin: if Hylton has no objection, this message will go in the mailbag this month.

Hylton has no objection so please FW to the necessary people provided some idea is given of how to ascertain when a new Linux Gazette is published.

Send an email, with the subject containing a magic word which mailing list software enjoys.... please take the slash out though... my sysadmin warns me if I utter this word in too short a mail, the mail goes to majordomo's owner and not to you.

subject: sub/scribe

to: lg-announce-request@ssc.com

(body text)

sub/scribe

--

(optionally your sig file)

...mentioning it in the body text should be unnecessary. That -- keeps it from trying your sig as a command too.

The result will be your membership on a list which sees mail about once a month, saying when the Gazette is posted.
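For anyone who would rather script the request than type it, the message described above can be built with Python's standard email package. This is a sketch only. The function name and the sender address are placeholders, and the code builds the message without sending it, so you still hand it to whatever mailer you normally use.

```python
from email.message import EmailMessage


def build_subscribe_request(sender,
                            list_request_addr="lg-announce-request@ssc.com",
                            signature=None):
    """Build (but do not send) the subscription mail described above.

    The magic word goes in the subject and in the body; a line holding
    only "--" follows so that a signature is not read as a command.
    """
    msg = EmailMessage()
    msg["From"] = sender
    msg["To"] = list_request_addr
    msg["Subject"] = "subscribe"
    body = "subscribe\n--\n"
    if signature:
        body += signature + "\n"
    msg.set_content(body)
    return msg


if __name__ == "__main__":
    # reader@example.org is a placeholder address, not a real subscriber.
    print(build_subscribe_request("reader@example.org", signature="A. Reader"))
```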

Because you are in another country you might want to look at the Gazette Mirrors listing and find a site which is closer to you. Your bandwidth cost might be the same but hopefully your download time won't cost as much. http://www.linuxgazette.com/mirrors.html

Thx for your permission to publish

Re: email distribution of the Gazette?

Please direct me towards a place where I can sign up to receive the Linux Gazette on a monthly or weekly basis when they become available.

I would like this to be a free service.

You don't "receive" Linux Gazette. You read it on the web or download the FTP files. LG is published monthly on the first of the month, although occasionally we have mid-month extra issues. -- Mike

We do have an announce list, though. Write to lg-announce-request@ssc.com

I suppose you could use the Netmind service (http://mindit.netmind.com) if you don't like ours.

Sending large files via email, regularly, is really, really-really, an incredibly bad idea. Just come get it via ftp when it's ready! Our ftp site is free: ftp://ftp.ssc.com/pub/lg

Heather,

Thank you for the response regarding my query.

May I suggest that the service of email distribution of the gazettes be investigated and provided to those people who sign up for them.

Please read our FAQ: we have considered it, and the answer is No.

Sending huge email attachments around is an undesirable burden on our own mail servers as well as major MX relay points.

I personally have made note of the ftp site, but here in South Africa I can only afford a dial-up connection, so the site has been added to a list to visit in the future. The problem comes in when I want to know if there are any new tutorials on the ftp space since my last visit. It would therefore be much more handy for me, and possibly others, if we were allowed to request and start receiving the gazettes via email.

You may subscribe to the announce list. When you get the announcement, visit the FTP site... or a local mirror.

Not everyone needs to receive it, just the ppl signed up to the distribution list.

Please?

Please use the internet's resources wisely. The whole thing is clogged up enough, without help from us.

Hi Heather,

OK, OK, I give up. It was just a suggestion.

The Internet is so slow already what with video clips going via email that I do not feel anything in using a little of it to increase my knowledge.

I have signed up to the announcement list and will use a mirror closest to me.

Is it possible to use Linux's wget to retrieve all the bulletins if I so wanted?

Hylton

Yes! That's the spirit! -- Heather

Submitters, send your News Bytes items in PLAIN TEXT format. Other formats may be rejected without reading. You have been warned! A one- or two-paragraph summary plus URL gets you a better announcement than an entire press release.

August 2001 Linux Journal

The August issue of Linux Journal is on newsstands now. This issue focuses on Platforms. Click here to view the table of contents, or here to subscribe.

All articles through December 1999 are available for public reading at http://www.linuxjournal.com/lj-issues/mags.html. Recent articles are available on-line for subscribers only at http://interactive.linuxjournal.com/.

July/August 2001 Embedded Linux Journal

The July/August issue of Embedded Linux Journal has been mailed to subscribers. This issue focuses on Platforms. Click here to view the table of contents. Articles on-line this month include Embedded Linux on the PowerPC, Will It Fly? Working toward Embedded Linux Standards: the ELC's Unified Specification Plan, Boa: an Embedded Web Server, and more.

US residents can subscribe to ELJ for free; just click here. Paid subscriptions outside the US are also available; click on the above link for more information.

SuSE

SuSE Linux Enterprise Server for the 32-bit architecture by Intel was awarded the status "Generally Available for mySAP.com". This certification now enables companies all over the world to utilise mySAP.com on SuSE Linux Enterprise Server and take advantage of services and support from SAP AG. For further information see http://www.sap.com/linux/

SuSE Linux presented a new Linux solution for professional deployment at the LinuxTag expo in Stuttgart, Germany. The "SuSE Linux Firewall on CD" offers protection for Internet-linked companies' mission-critical data and IT infrastructure. SuSE Linux Firewall is an application level gateway combining the high security standards of a hardware solution with the flexibility of a software firewall. Instead of being installed on the hard disk, SuSE Linux Firewall is a so-called live system that enables the operating system to be booted directly from a read-only CD-ROM. Since it is impossible to manipulate the firewall software on CD-ROM, the live system constitutes a security gain. The configuration files for the firewall, such as the ipchains packet filter settings, are placed on a write-protected configuration floppy.

Upcoming conferences and events

Listings courtesy Linux Journal. See LJ's Events page for the latest goings-on.

| Event | Dates | Location | URL |
|---|---|---|---|
| O'Reilly Open Source Convention | July 23-27, 2001 | San Diego, CA | http://conferences.oreilly.com |
| 10th USENIX Security Symposium | August 13-17, 2001 | Washington, D.C. | http://www.usenix.org/events/sec01/ |
| HP World 2001 Conference & Expo | August 20-24, 2001 | Chicago, IL | http://www.hpworld.com |
| Computerfest | August 25-26, 2001 | Dayton, OH | http://www.computerfest.com |
| LinuxWorld Conference & Expo | August 27-30, 2001 | San Francisco, CA | http://www.linuxworldexpo.com |
| Red Hat TechWorld Brussels | September 17-18, 2001 | Brussels, Belgium | http://www.europe.redhat.com/techworld |
| The O'Reilly Peer-to-Peer Conference | September 17-20, 2001 | Washington, DC | http://conferences.oreilly.com/p2p/call_fall.html |
| Linux Lunacy (co-produced by Linux Journal and Geek Cruises; "Send a Friend LJ and Enter to Win a Cruise!") | October 21-28, 2001 | Eastern Caribbean | http://www.geekcruises.com |
| LinuxWorld Conference & Expo | October 30 - November 1, 2001 | Frankfurt, Germany | http://www.linuxworldexpo.de |
| 5th Annual Linux Showcase & Conference | November 6-10, 2001 | Oakland, CA | http://www.linuxshowcase.org/ |
| Strictly e-Business Solutions Expo | November 7-8, 2001 | Houston, TX | http://www.strictlyebusinessexpo.com |
| LINUX Business Expo (co-located with COMDEX) | November 12-16, 2001 | Las Vegas, NV | http://www.linuxbusinessexpo.com |
| 15th Systems Administration Conference/LISA 2001 | December 2-7, 2001 | San Diego, CA | http://www.usenix.org/events/lisa2001 |

Teamware and Celestix to co-operate


Teamware Group and Celestix Networks GmbH, a supplier of Linux-based server appliances, have signed a global agreement under which Teamware Office-99 for Linux groupware will be distributed and sold with the Celestix "Aries" micro servers. Supporting a wireless standard, the new system is the first wireless groupware server offered for small businesses.

PostgreSQL: The Elephant Never Forgets


Open Docs Publishing has announced today that they plan to ship their sixth book, entitled "PostgreSQL: The Elephant Never Forgets", by the first week of August 2001. This title will include the community version of PostgreSQL 7.1, the PostgreSQL Enterprise Replication Server (eRserver) and the LXP application server. This will offer companies the security of a replication server, data backup services, disaster recovery, and business continuity solutions previously limited to more costly commercial Relational Database Management System (RDBMS) packages. Who could ask for more? The book will fully document installation procedures, administration techniques, usage, basic programming interfaces, and replication capabilities of PostgreSQL. A complete reference guide will also be included. The book is now online for development review and feedback.

Total Impact's new "briQ" network appliance


Total Impact's new network appliance computer, the briQ, weighs in at 32 ounces and has been selected as IBM's first PowerPC Linux Spotlight feature product. As a featured product, the briQ and its benefits are profiled in the June edition of IBM's Microprocessing and PowerPC Linux website, and may be reviewed at the above link, or at Total Impact's website.

LinuxWorld San Francisco to showcase embedded Linux solutions

IDG World Expo announced today that the upcoming LinuxWorld Conference & Expo, to be held August 28-30, 2001 at San Francisco's Moscone Convention Center, will feature an Embedded Linux Pavilion to educate attendees on the growing opportunities for Linux in embedded computing. The Pavilion will be sponsored by the Embedded Linux Consortium (ELC), a vendor-neutral trade association dedicated to promoting and implementing the Linux operating system throughout embedded computing.

Linux Links


The Duke of URL has the following which may be of interest:

ONLamp.com have an article on migrating a site from Apache 1.3 to 2.0.

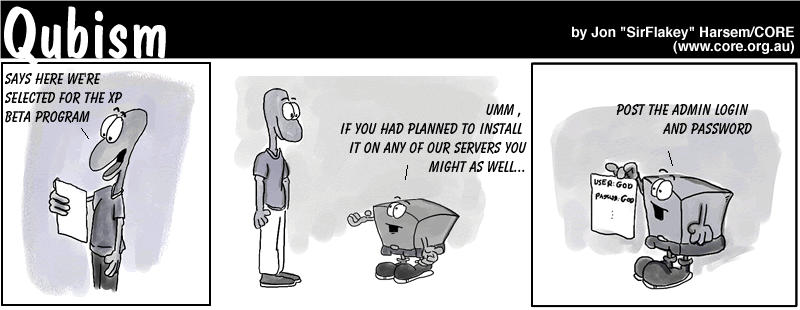

The Wall Street Journal's Personal Technology section has news of an unsettling nature about Windows XP registration, and there is a discussion of same on Slashdot.

Other Microsoft news indicates that MS may help create .NET for Linux. Microsoft told ComputerWire it would provide technical assistance to Ximian Inc. in its work on the Mono Project, to develop a version of .NET for Linux and Unix using open source development. This story was highlighted on Slashdot.

I don't want to seem MS obsessed, or anything ;-), but Linux Weekly News has a critique by Eric Raymond on Microsoft's attacks on so-called "viral licences".

More PostgreSQL news: PostgreSQL Performance Tuning.

An OO approach to the classic how to place eight queens on a chessboard so that none can attack any other problem, in Python.

If you are troubled by spam http://combat.uxn.com/ might be of interest to you.

The TRS-80, the world's first laptop, is still in use.

For the loser^H^H^H^H^Hgeek who has everything, The Joy of Linux has tons of insider trivia. As the Slashdot review begins, "It's 2001. Do you love your operating system?"

Linux NetworX launches ClusterWorX 2.0 cluster management software

Linux NetworX, a provider of Linux cluster computing solutions, has announced the launch of its cluster management software ClusterWorX 2.0. The latest version of ClusterWorX includes new features such as secure remote access, thorough system and node monitoring and events, and easy administrator customisation and extensibility.

IBM database news


DocParse HTML to XML/SGML converter


Command Prompt, Inc. have released DocParse 0.2.6. DocParse is a tool for technical authors who maintain a large amount of HTML-based documentation. DocParse will take any HTML document and convert it into a valid DocBook XML/SGML document. From the XML or SGML format a user can easily convert the document to XHTML, HTML, RTF (MS Word format) and even print-ready PostScript. DocParse currently runs on x86 Linux only. They will release for YellowDog Linux (PPC) and MacOS X shortly. There are per-seat and subscription-based licensing models available for immediate purchase.

StuffIt for Linux and Solaris ships


Aladdin Systems has shipped its award-winning compression software, StuffIt, for Linux and Sun's Solaris operating systems, making StuffIt available on Windows, Macintosh, Linux, and Solaris platforms. StuffIt for Linux and Solaris can be used to create Zip, StuffIt, Binhex, MacBinary, Uuencode, Unix Compress, and self-extracting archives for Windows or Macintosh platforms, and it can be used to expand all of the above plus bzip, gzip, arj, lha, rar, BtoAtext and Mime files. StuffIt for Linux can be downloaded at: www.stuffit.com.

Great Bridge announces enhanced PostgreSQL 7.1 package


Great Bridge today announced the launch of Great Bridge PostgreSQL 7.1, the latest version of the world's most advanced open source database. Great Bridge has packaged the release with a graphical installer, leading database administration tools, professional documentation and an installation and configuration support package to help application developers quickly and easily deploy the power of PostgreSQL in advanced database-driven applications.

[There are at least two other entries about PostgreSQL in this News Bytes column. Can you find them?]

Timesynchro


Thank you to Frank for summarising the website and features.

Surveyor Webcamsat


Surveyor Corporation, makers of the WebCam 32 webcam software, has just announced a family of webcam servers that addresses the need for increased control over web content. Surveyor's Webcamsat (Web Camera Satellite Network -- Linux-based) family of webcam servers gives website operators a new level of control over their streamed audio and video, allowing them the flexibility to control multiple cameras with greater ease.

Kaspersky Labs anti-virus software


The latest version of Kaspersky Anti-Virus affords customers the opportunity of additionally installing a centralized anti-virus defense for file servers and application servers operating on OpenBSD (version 2.8) and Solaris 8 (for Intel processors) systems, and also for exim e-mail gateways (one of the five most popular e-mail gateways for Unix/Linux). Development of this anti-virus package came about as a result of responding to the needs of mid-size and large corporate customers using Unix, and particularly Linux.

Currently, Kaspersky Anti-Virus can be utilized on Linux, FreeBSD, BSDi, OpenBSD, and Solaris operating systems, and also contains a ready-made solution for integration into sendmail, qmail, postfix, and exim e-mail gateways. The package includes the scanner, daemon and monitor anti-virus technology, and also includes automatic anti-virus database updates via the Internet, an interactive parameter set-up shell and a module for generating and processing statistic reports.

www.kaspersky.com (Russia)

The Answer Gang


There is no guarantee that your questions here will ever be answered. Readers at confidential sites must provide permission to publish. However, you can be published anonymously - just let us know!

Greetings from Heather Stern

Hello, everyone, and welcome once more to the world of the Linux Gazette Answer Gang.

The peeve of the month having been Non-Linux questions for a few too many weeks in a row, The Answer Gang has a new address. Tell your friends:

linux-questions-only

...at ssc.com is now the correct place to mail your questions, and your cool Linux answers. It's our hope this will stop us from getting anything further about pants stains, U.S. history, etc. Cross platform matters with Linux involved are still fine, of course.

For some statistics... there were over 31 answer threads, 25 tips (some were mini threads) and over 600 messages incoming - and that's after I deleted the spam that always leaks through. 200 more messages than last month. I'm pleased to see that the Gang is up to the task.

Now at this point I bow humbly and beg your forgiveness, that, being a working consultant with more clients than usual keeping me busy, I wasn't able to get all of these HTML formatted for you this time. In theory I can put a few as One Big Column but the quality is worse and we drive the search engines crazy enough already. I can definitely assure you that next month's Answer Gang will have tons of juicy answers.

Meanwhile I hope I can mollify you with some of the Linux tools that have been useful or relevant to me during my overload this month.

Mail configuration has been a big ticket item here at Starshine. You may or may not be aware that by the time we go to press, the MAPS Realtime Blackhole List is now a paid service. That means folks who have been depending on the RBL and its companion, the Dialup List, have to pay for the hard work of the MAPS team... and their bandwidth. You can find other sources of blacklisting information, or start enforcing your own policies ... but I would like to make sure and spread the news that they aren't going exclusively to big moneybags - file for hobbyist, non-profit or small site usage and you don't have to pay as much. Maybe nothing. But you do have to let them know if you want to use it, now.

My fellow sysadmins had been seeing this coming for a long time. Many actually prefer to know what sort of things are being blocked or not, anyway. Censorship after all, is the flip side of the same coin. Choosing what's junk TO YOU is one thing, junking stuff you actually need is entirely another. If others depend on you then you have to be much more careful. Plaintext SMTP isn't terribly secure but it's THEIR mail, unless you have some sort of contract with them about it.

So, I've been performing "Sheriff's work" for at least one client for a long while now anyway - just tweaking the filter defenses so that the kind of spam which gets in, stays out next time. There's a fairly new project on Sourceforge called Razor, which aims for anti-spam by signatures, the same way that antivirus scanners check for trojans and so on. I haven't had time to look into it, but I think they're on the right track.

Procmail (my favorite local delivery agent) has this great scoring mechanism; it can help, or it can drive you crazy (depending on whether you grok their little regex language - I like it fine). I definitely recommend taking a look at "junkfilter" package of recipes for it even if you are planning to roll your own. The best part is that it is not just one big recipe - it's a bunch of them, so you can choose which parts to apply.

Do make sure you have at least version 3.21 of procmail though. It's actually gotten some improvement this month.

Folks who hate this stuff can try Sendmail's milters, Exim's filtering language, or possibly, do it all at the mail clients after the mail has been delivered to people.

Whether your filters are mail-client, local-delivery, or MTA based, making them sanity check that things are coming really to you, and from addresses that really exist, can have a dramatic improvement. The cost is processing power and often, a certain amount of network bandwidth, but if you're really getting hammered, it's probably worth it. Besides if my 386 can deal with just plain mail your PentiumIII-700 can actually do some work for a living and probably not even notice, until your ethernet card starts complaining. More on that 386 in a bit...

I've got a client who just switched from University of Washington's IMAP daemon over to Courier. The Courier MTA is just terrible (we tried, but ended up thoroughly debugging a sendmail setup instead, and the system is MUCH happier). But the IMAP daemon itself is so much better it's hard to believe. He's convinced that it is more than the switch to maildirs that makes it so incredibly fast. He does get an awful lot of mail, so I suspect Maildirs is what made the difference noticeable. We may never know for sure.

The world of DNS is getting more complicated every month, and slower. This has been clearly brought to light for me by two things - my client at last taking over his own destiny rather than hosting through an ISP, and my own mail server here at Starshine.

It used to be that there was only one choice for DNS, so ubiquitous it's called "the internet name daemon" - BIND, of course. And I'm very pleased to see that its new design seems to be holding up. Still it has the entire kitchen sink in it, and that makes it very complicated for small sites, even though there are a multitude of programs out there to help the weary sysadmin.

A bunch of folks - including some among the Gang - really enjoy djbdns, but you have to buy into DJ Bernstein's philosophy about some things in order to be comfortable with it. Its default policies are also a bit heavy handed about reaching for the root servers, which are, of course, overloaded. Still it's very popular and you can bet the mailing list folks will help you with it.

However, his stuff (especially his idea of configuration files and "plain english" in his docs) gives me indigestion, so I kept looking. There are so many caching-only nameservers I can't count them all. It's a shame that freshmeat's DNS category doesn't have sub categories for dynamic-dns, authoritative, and caching only, because that sure would make it easier to find the right one for the job.

However, I did find this pleasant little gem called MaraDNS. It was designed first to be authoritative only, uses a custom string library, and is trying to be extra careful about the parts of the DNS spec it implements. It was also easy to set up; zone files are very readable. It looks like the latest dev version allows caching too... though whether that's a creeping-feature is a good question.

For years I've been pretty proud that we can run our little domain on a 386. (Ok, we are cheating, that's not the web server.) But I could just kick myself for forgetting to put a DNS cache on it directly. So the poor thing has been struggling with the evil internet's timeouts lately and bravely plugging on... occasionally sending me "sorry boss, I couldn't figure out where to send it" kind of notes. (No, it's not qmail. I'm translating to English from RFC822-ese.)

So I look at the resolv.conf chain. No local cache. What was I thinking? (or maybe: What? Was I thinking? Obviously not.)

I tried pdnsd, because I liked the idea of a permanent cache... much more like having squid between you and the web, than just having a little memory buffer for an hour or two.

However, the binary packages didn't work. I wasn't going to compile it locally at the 386. I'll get to reading its source maybe, but if anyone has successful experiences with it, I'd enjoy seeing your article in the Gazette someday soon. I don't think I've tried very hard yet, but I had hoped it would be easier.

Meanwhile I had no time left and Debian made it a snap to have bind in cache only mode. Resolutions during mail seem to be much happier now.

There are also more mailing list managers out there than plants in my garden. I've got a big project for a different client where the "GUI front end" is being dumbed down for the real end users, and I get to cook up a curses front end in front of the real features, for the staff to use. It's very customized to their environment. I do hope they like it.

If you're working on a mailing list project, I beg, I plead, try and have something in between the traditional thrashing through pools of text files, and the gosh-nobody-wants-security-these-days web based administration. That way I can take less time to make the big bucks, and folks are a little bit happier with Linux.

However, if you have in mind to do anything of the sort on your own, and you prefer to work with shell scripts, I recommend Dialog. Make sure you get a recent version though. There are a gazillion minor revisions and brain damaged variants like whiptail. Debian seemed to have the newest and most complete amongst the distros I have lying around, so I ended up grafting its version into another distro. But, I finally tripped across a website for it that appears to be up to date. Use the "home" link to read of its muddied past.

Lastly, Debian potato for Sparc isn't nearly as hard as I thought it was going to be, but configuring all those pesky services on a completely fresh box, that's the same pain every time. It wouldn't be, if every client had the same network plans, but - you know it - they don't!

I also had no ready Sparc disc 1, but a pressing need to get it, and my link is not exactly the world's speediest.

Debian's pseudo image kit is a very strange and cool thing. It's a bit clunky to get going - you need to fetch some text files to get it started, and tell it what files are actually in the disc you're going to put together. But, once you've fed it that, it creates this "dummy" image which has its own padding where the directory structures will go, and the files go in between. If some of them don't make it, oh well. But you can get them from anywhere on the mirror system ... much closer to home, usually. Leave the darn thing growing a pseudo image overnight, then come back the next day and run rsync against an archive site that allows rsync access to its official Debian CDs. Instead of a nail-biting 650 MB download, you fetch 3 to 20 MB or so of bitflips and file changes. If you either can't handle 650 MB at a time anyway, or like the idea of the heavy hit on your bandwidth allocation just being that last clump of changes, it's a very good thing.

All it needs now is to be even smarter, and programmatically be able to fetch newer copies of the packages, then compose a real directory structure that correctly describes the files. If someone could do that, you'd only have to loopback mount the pseudoCD and re-generate Packages files, to have a current- instead of an Official disc, including all those security fixes we need to chase down otherwise. Making it bootable might be more tricky, but I'd even take a non-bootable one so I can give clients a mini-mirror site just by handing them a CD.

So, I hope some of you find this useful. I'm sure I'll see a number of you, and possibly some other members of the Answer Gang, at LinuxWorldExpo.

'Til next time -- Heather Stern, The Answer Gang's Editor Gal

More 2¢ Tips!


bin/cue - iso files

Question From: "M.Mahtaney" <mahtaney from pobox.com>

hi james

i came across your website while looking for some help on file compression/conversion

i downloaded an archive in winrar format - which then decompressed to bin/cue files

i'm not sure what to do with that now - i've converted it to an iso file, but i need to basically open it up to gain access to the application that was downloaded.

i'm a little lost on how to do that - can you help?

thanks, mgm.

Hi MGM,

Have you tried to mount the iso image and copy the files out? There may be another way but this worked for me.

mount -o loop -t iso9660 file.iso /mnt

ls /mnt

should then give a list of the files in the iso image.

Hope this helps.

Kind regards Andrew Higgs
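If the mount refuses the file, it may be worth checking that the conversion really produced a plain ISO 9660 image. The sketch below is only an illustration (the function name `is_iso9660` is made up here): it reads the five bytes that follow the start of the primary volume descriptor at sector 16, which hold the string "CD001" on a genuine image.

```shell
#!/bin/sh
# is_iso9660 FILE: succeed if FILE carries the ISO 9660 signature.
# The primary volume descriptor starts at sector 16 (byte 32768);
# bytes 1-5 of that descriptor hold the string "CD001".
# (Hypothetical helper name, used for illustration only.)
is_iso9660() {
    sig=$(dd if="$1" bs=1 skip=32769 count=5 2>/dev/null)
    [ "$sig" = "CD001" ]
}
```

Something like `is_iso9660 file.iso && mount -o loop -t iso9660 file.iso /mnt` would then only attempt the mount when the signature is present. A failed check suggests the bin/cue conversion did not yield a plain image.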

Segmentation Faults -- At odd times.

Question From: "Jon Coulter" <jon from alverno.org>

I've recently upgraded the ram on my linux box in an attempt to wean some of the memory problems that have been occurring

What were the previous problems? Just running out of memory, or other things?

, and now get "Segmentation Fault"'s on almost every program about 80% of the time (if I keep trying and keep trying to run the program, it eventually doesn't Segmentation Fault for one time... sometimes then segmentation faulting later in its execution). My question is: Is there anything I can do about this?!? But seriously, is there any chance that a kernel upgrade would help fix this (my current kernel is 2.4.2)? I just don't understand why a memory upgrade would cause problems like this. I've run memtest86 test on the ram to ensure that it wasn't corrupted at all.

Frequent segfaults at random times usually indicate a hardware problem, as you suspected. A buggy kernel or libraries can throw segfaults left and right too, but if you didn't install any software at the time the faults started occurring, that rules that out.

Does /etc/lilo.conf have an append="mem=128M" line or something like that in it? Some BIOSes require this attribute to be included, others don't. But if it's specified, it must be correct. Especially, it must not be larger than the amount of RAM you have: otherwise programs will segfault all over the place and you'll be lucky to even get a login prompt. Remember to run lilo after changing lilo.conf.

Try taking out the memory and reseating it. Also check the disks: are the cables all tight and the partitions in order? Since memory gets swapped to disk if swap is enabled, it's possible for disk problems to masquerade as memory problems.

If you put back your old memory, does the problem go away? Or if you're mixing old and new memory, what happens if you remove the old memory? Are the two types of memory the same speed? Hopefully the faster memory will cycle down to the slower memory, but maybe the slower memory isn't catching up. What happens if you move each memory block to a different socket?

I'm running 2.4.0. There are always certain evil kernels that must be avoided, but I don't remember any such warnings for the 2.4 kernels. Whenever I upgrade, I ask around about the latest two stable kernels, and use whichever one people say they've had better experiences with.

Good luck. Random segfaults can be a very irritating thing.

More From: Ben Okopnik (The Answer Gang)

Take a look at the Sig 11 FAQ: <http://www.bitwizard.nl/sig11/>.

2c tip : editing shell scripts

For shell scripts it's a common error to accidentally include a space after the line-continuation character. This is interpreted as a literal space with no continuation; probably not what you want. Since spaces are invisible, it can also be a hard error to spot. If you use emacs, you can flag such shell script errors using fontification. Add this code to your ~/.emacs file:

;;; This is a neat trick that makes bad shell script continuation
;;; marks, e.g. \ with trailing spaces, glow bright red:
(if (eq window-system 'x)
    (progn
      (set-face-background 'font-lock-warning-face "red")
      (set-face-foreground 'font-lock-warning-face "white")
      (font-lock-add-keywords 'sh-mode
        '(("\\\\[ \t]+$" . font-lock-warning-face)))))

Matt Willis

More From: Dan Wilder (The Answer Gang)

And in vi,

:set list

reveals all trailing spaces, as well as any other normally non-printing characters, such as those troublesome leading tabs required in some lines of makefiles, and any mixture of tab-indented lines with space-indented lines you might have introduced into Python scripts.

:set nolist

nullifies the "list" setting.

More From: Ben Okopnik (The Answer Gang)

Good tip, Matt! I'll add a bit to that: if you're using "vi", enter

:set nu list

in command mode to number all lines (useful when errors are reported), show all tabs as "^I", and end-of-lines as "$". Extra spaces become obvious.

In editors such as "mcedit", where selected lines are highlighted, start at the top of the document, begin the selection, and arrow (or page) down. The highlight will show any extra spaces at line-ends.
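The same check can also be made outside any editor. What follows is a minimal sketch, not part of any of the tips above: it assumes a POSIX grep, and `bad_continuations` is a name invented for illustration. It lists every line on which a backslash is followed only by spaces or tabs.

```shell
#!/bin/sh
# bad_continuations FILE...: print "file:line:text" for every line where a
# backslash is followed only by spaces or tabs before the end of the line.
# /dev/null is passed as an extra file so that grep always prints the
# file name, even when only one script is given.
# (Hypothetical helper name, used for illustration only.)
bad_continuations() {
    grep -n '\\[[:blank:]]\{1,\}$' /dev/null "$@"
}
```

Running `bad_continuations *.sh` before committing a batch of scripts gives the file and line number of each offender. grep's exit status is non-zero when nothing was found, so it also works as a quick test in a makefile or hook.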

2.2.20 kernel?

Question From: Ben Okopnik

Where can I get one? I've looked at kernel.org, and they only go up to .19...

Ben

Glad you asked, Ben, now I have an excuse to cough up a 2c Tip.

Cool. Thanks!

The two places to look for "the rest of it" if you really want a bleeding edge kernel are:

Nope; just 2.4.x stuff.

Alan keeps subdirectories in there which should help you figure things out. In the moment I type this, his 2.4.6 patch is at ac5 and his 2.2.20pre is at 7.

As of press time, 2.4.7 patch is at ac3 and 2.2.20pre is at 8. -- Heather

These are of course patches that you apply to the standard source after you unpack the tarball.

For a last tidbit, the web page at http://www.kernel.org keeps track of the current Linus tree, listing both the released version number and the latest file in the /testing area.

Linux on Sun Sparc??

Question From: Danie Robberts <DanieR from PQAfrica.co.za>

Is there a way to install this. I can see the Sparc64 architecture under /usr/src/linux/arch

Booting from the slackware 8 cd does not work, and it seems as if Slackware's Web site is "unavailable"

Please, any pointers!

Cheers Danie

Sure.

The Linux Weekly News just wrote that Slackware is no longer supporting their Sparc distro. There's a sourceforge project to take over the code: http://sourceforge.net/projects/splack

Entirely possible that some decent community interest would boost it nicely, but if so, why'd Slackware have to let go of it... dunno...

Maybe you want to try another distro that builds for Sparc - RH's sparc build isn't well regarded amongst a few non-Intel types I know locally... I think in part because they get to Sparcs last. But you've lots of choices anyway. The search engines (keywords: sparc distribution) reveal that Mandrake and SuSE both have Sparc distros, Rock Linux (a distro that builds from sources - http://www.rocklinux.org) builds successfully on it, and of course there's my personal favorite, Debian.

The Sparc-HOWTO adds Caldera OpenLinux and TurboLinux to the mix. I know that Caldera is really pushing to support the enterprise but that points to openlinux.org and appears to be an unhappy weblink - I only did a spot check, but didn't see "Sparc" on Caldera's own pages. One may safely presume that someone who wants Caldera's style of enterprise level support for Linux will be getting Sun maintenance contracts for their high end Sparcs, right?

Anyways the reasons that Debian is my personal favorite in this regard are:

But you don't have to take my word for it

There's a bunch of folks at http://www.ultralinux.org who pay attention to how well things work on ultrasparcs, and they've helpfully gathered a bunch of pointers into the archives and subscribe methods for the lists maintained by the major vendors in this regard. So check out what people who really use Sparcs have been saying about 'em! Using your model number as a search key may narrow things down, too.

Routing Mail

Question From: Danie Robberts (DanieR from PQAfrica.co.za)

Hi,

I am trying to set my environment up so that I can use Star Office on my Slackware 8 Laptop, and send mail via our Exchange Servers. The problem is that the IIS Department has only allowed my Sun Workstation to Send mail to their List server. I think it is limited by IP @.

Is there a way to configure a type of mail routing agent, so that my sending address in S/O will be the IP@ of my Sun Workstation, and the receiving will be the exchange server (At the moment I can only receive e-mail)

Here is the IP Setup:

List Server: 196.10.24.112
Exchange: 196.10.24.33
Laptop: 192.168.102.241
Sun: 192.168.102.44

Thanx

Danie

Not directly, while your Sun workstation is online. You'd have to give your Linux workstation the Sun's IP number. Two hosts with the same IP on the same network is a recipe for trouble!

If you've full control of your Sun, you could arrange to use it as your email relay host, for an indirect solution.

It looks like StarOffice can be configured to use two different mail servers, one for outgoing, one for incoming. Set the outgoing server to the Sun, and the incoming to the exchange server. Configure the MTA on the Sun (sendmail, I expect) to relay for you, and you're all set.

To test the Sun,

telnet sun 25
helo linux.hostname
mail from: <you@your.domain>
rcpt to: <somebody@reasonable.domain>
data
some data
.
quit

"linux.hostname" is the hostname of your linux system
"you@your.domain" is your return email address
"somebody@reasonable.domain" is some reasonable recipient

If you don't get a refusal, and the email goes through, the Sun is set up to relay.
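For anyone who would rather script that test than type it, here is a rough sketch under a few assumptions. It relies on bash's /dev/tcp redirection, which some bash builds leave out. The function names are invented for illustration, and the host and addresses are the same placeholders as above. It stops after "rcpt to", which is where a relay refusal normally shows up, so no message is actually sent.

```shell
#!/bin/bash
# reply_ok LINE: succeed if an SMTP reply starts with a 2xx or 3xx code.
reply_ok() {
    case "$1" in
        [23][0-9][0-9]*) return 0 ;;
        *) return 1 ;;
    esac
}

# relay_check HOST HELO_NAME SENDER RECIPIENT
# Walks the dialogue as far as RCPT TO and then quits.
# Assumes single-line replies and a bash built with /dev/tcp support.
relay_check() {
    host=$1 helo=$2 from=$3 to=$4
    exec 3<>"/dev/tcp/$host/25" || return 1
    read -r reply <&3                         # server greeting
    reply_ok "$reply" || { exec 3>&-; return 1; }
    for cmd in "HELO $helo" "MAIL FROM:<$from>" "RCPT TO:<$to>"; do
        printf '%s\r\n' "$cmd" >&3
        read -r reply <&3
        reply=$(printf '%s' "$reply" | tr -d '\r')
        printf '%s -> %s\n' "$cmd" "$reply"
        reply_ok "$reply" || { printf 'QUIT\r\n' >&3; exec 3>&-; return 1; }
    done
    printf 'QUIT\r\n' >&3
    exec 3>&-
}
```

It would be called as `relay_check sun linux.hostname you@your.domain somebody@reasonable.domain`. A non-zero exit status, together with the last reply printed, shows where the Sun refused.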

Command to read CMOS from running Linux system

Question From: Paul Kellogg (pkellogg from avaya.com)

To "The Answer Gang":

I am looking for a program I can run on my Linux machine that will display the CMOS settings and CPU information. I would like to avoid rebooting to display the information. Have you heard of a program that will work for this? And if so, do you know where I can get it? If not, do you have some suggestions for where to start if I wanted to write such a program?

I am working with a RedHat 6.2 system on a intel platform.

Thanks - Paul.

Hi Paul,

Try `cat /proc/cpuinfo` for information regarding the CPU. I am not too sure about the CMOS info. It depends on what you are looking for.

Look at the files in /proc and see if you can find what you are looking for.
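If only one or two of those CPU fields are wanted, for a script say, a small helper along these lines might do. It is a sketch only: `cpu_field` is an invented name, and it assumes the usual "name : value" layout of /proc/cpuinfo. It covers the CPU side only and does not touch the CMOS.

```shell
#!/bin/sh
# cpu_field NAME [FILE]: print the value(s) of one /proc/cpuinfo field,
# one line per processor entry. FILE defaults to /proc/cpuinfo.
# (Hypothetical helper name, used for illustration only.)
cpu_field() {
    awk -F: -v want="$1" '
        {
            key = $1
            gsub(/^[ \t]+|[ \t]+$/, "", key)      # trim the field name
            if (key == want) {
                # take everything after the first colon as the value
                val = substr($0, index($0, ":") + 1)
                gsub(/^[ \t]+|[ \t]+$/, "", val)  # trim the value
                print val
            }
        }' "${2:-/proc/cpuinfo}"
}
```

For example, `cpu_field "model name"` prints the model string once per processor, and `cpu_field "cpu MHz"` prints the clock speed the kernel measured.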

I hope this will help in some small way.

Kind regards

linux ftp problem

Question From: Brad Webster (webster_brad from hotmail.com)

ok here's the deal, i'm having a heck of a time with the ftp client on my linux server. i'm running red hat 6 and i can connect fine, but then it will not accept the username and password for any of the users. the worst part is the same username and passwords will connect through telnet? any suggestions would be very appreciated

brad webster

Hello,

First off, I shall begin by saying, please in future send your e-mail in plain-text format and NOT in HTML. Poor Heather will have a hell of a time trying to extract the important information from the e-mail.

It's easier than quoted printable, but, yeah, it's a pain. -- Heather

So, to your problem. There are a number of things that you can check. Firstly, when using FTP, do you make use of the hidden file ".netrc" in each of your users' home directories? In that file, you can store the ftp machine, and the username and password of the user. Typically, it would look like the following:

machine ftp.server.sch.uk login usernametom password xxxxxxx
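A small sketch of setting such a file up from the shell. The host, login and password are the placeholders from the line above, write_netrc is a made-up helper name, and the sketch writes into a scratch directory rather than a real home directory. Most ftp clients refuse to use a .netrc that contains a password and is readable by other users, hence the chmod.

```shell
#!/bin/sh
# write_netrc DIR MACHINE LOGIN PASSWORD: write a one-entry .netrc into
# DIR and make it private to its owner (hypothetical helper).
write_netrc() {
    dir=$1
    printf 'machine %s login %s password %s\n' "$2" "$3" "$4" > "$dir/.netrc"
    chmod 600 "$dir/.netrc"
}

scratch=$(mktemp -d)            # stand-in for a user's home directory
write_netrc "$scratch" ftp.server.sch.uk usernametom xxxxxxx
cat "$scratch/.netrc"
ls -l "$scratch/.netrc"
rm -rf "$scratch"
```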

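The reason why your users can log in via telnet is that telnet is a different protocol, handled by a different daemon than FTP, so a login that works with one can still be refused by the other. I would also suggest using a program such as "ssh", which is more secure than telnet, but I digress.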

Regards,

Thomas Adam

Self extracting shell script

Question From: Albert J. Evans (evans.albert from mayo.edu)

Hi,

I'm looking through some older Linux Gazette articles and noticed "fuzzybear" had put out a location to download his "self extracting w/make" shell script. He was responding to your inquiry about this script. The URL he listed is now defunct. Did you by chance get a copy of it, or know his current URL?

You know, I searched the past issues of LG... and I must admit that I couldn't find my own article mentioning that. I'm pretty sure that the URL I gave is the same as it is now - I don't remember changing it at all - and I've just tested it (with Lynx.) It's still good. Anyway, here it is -

http://www.geocities.com/ben-fuzzybear/sfx-0.9.4.tgz

The reason I haven't been particularly hyped about this thing is that, the more time passes, the less I think of it as a good idea. Given that one of the classes I teach for a living is Unix (Solaris) security, it's actually my job to believe that it's a bad idea. Just like many other things, it's perfectly fine as long as you trust the source of the data - but things rapidly skid downhill as soon as that comes into question. Sure, in the Wind*ws world, people blithely exchange "Setup.exe" files; they also suffer from innumerable viruses, etc.

Think well before you use this tool. Obviously - and if it isn't obvious, even downloading it might be a bad idea - never-ever-*ever* run a self-extracting archive, or any executable that is not 100% trustworthy, as root.

...at this point Ben drew a huge ASCII-art skull and crossbones...

You have been warned.


Ben Okopnik

Cannot Format Network Drive

I'm not quite sure if you can help me out with this situation. Okay, here's the lowdown: I have one IDE hard drive, 35 gigs. About a month ago I added the program System Commander so I could put Windows 98, Windows 2000, and Linux on my system. I did it successfully! Then about a week ago I wanted everything off, because I bought a separate PC for Linux. This is how I wiped everything off my drive: I used Debug to wipe everything off and used fdisk to try to get rid of all of the partitions... but it still didn't get all of the partitions off (looking in fdisk). How I got rid of all of them was doing the commands

lock c:

fdisk /mbr

Then I went back to fdisk and deleted the remaining partitions. This did work... there are no longer any drives listed in fdisk. So then I created two drives, c: and d:, both about 15 gigs or more. But now this is where I run into my problem... After rebooting I tried to format those drives and it's giving the error message CANNOT FORMAT A NETWORK DRIVE???? I know this is a lot more information than you probably needed, but I thought I'd better say everything so that you know the whole story... Can you help me? Do you know of anything I could try?

Well, since you've removed Linux, your options are pretty thin. It sounds like your 'fdisk' screwed up somewhere, or you have a virus (I remember some, a looong time ago, that did that.) One of the easiest ways to handle it would be to download Tom's rootboot <http://www.toms.net/rb/>, run it, and use the Linux fdisk that comes with it (you should read the 'fdisk' man page unless you're very familiar with it.) It can rewrite your partitions and assign the correct types to them; after that, you should be able to boot to DOS and do whatever you need.

Ben Okopnik

Wu-FTP and Linux Newbie

Question From: seboulva (seboulva from gmx.de)

Sorry about this simple question, but I am a Linux newbie and I want, no, I must update our Wu-FTP server. It comes with Red Hat 6.3.

I downloaded the 3 *.patch files and want to apply them with the patch command.

Do you have the source code for wu-ftp and did you compile it yourself last time? Patch files are usually for source code, and they will change a specific version to a specific other version. Are you sure that the patch files match your source code version? If they are Red Hat's patch files for their 6.3 distribution, that should match. But most probably they match only the source code from their 6.3 CDs.

If all this matches, you should be able to compile the basic wu-ftp as it comes with 6.3. Then you can try to patch the source and recompile.

Depending on the patch files (especially with which path they are generated) you would do something like:

cd wu-ftp-source

patch -p 1 < ./path/to/patchfile

The -p x option strips off x directory levels from the path in the patch file. If you look in the ASCII patch files you will see file names with paths -- they have to match where your source is.

'patch --help' says, among other very useful things:

--dry-run Do not actually change any files; just print what would happen.

so this is the option for testing until it looks as if it will apply cleanly. Then remove the dry-run and do it for real.
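To make the effect of -p concrete, the sketch below mimics in plain shell what patch does to a file name taken from a patch file: it drops the given number of leading directory levels. strip_components is a hypothetical helper written for this illustration and the wu-ftpd path is an invented example; patch does this internally, and how it handles names with too few levels is not modelled here.

```shell
#!/bin/sh
# strip_components N PATH: remove the first N directory levels from PATH,
# mirroring how "patch -p N" treats file names found in a patch file.
strip_components() {
    n=$1
    path=$2
    while [ "$n" -gt 0 ]; do
        case $path in
            */*) path=${path#*/} ;;   # drop everything up to the first slash
            *)   break ;;             # nothing left to strip; this sketch just stops
        esac
        n=$((n - 1))
    done
    printf '%s\n' "$path"
}

strip_components 0 wu-ftpd-2.6.0/src/ftpd.c   # name used as-is
strip_components 1 wu-ftpd-2.6.0/src/ftpd.c   # what -p 1 would look for
strip_components 2 wu-ftpd-2.6.0/src/ftpd.c   # what -p 2 would look for
```

Whichever result names a file that exists relative to the directory you are in tells you the -p value to try, first with --dry-run and then for real.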

Recompile wu-ftp and then install it in the system. You will have to restart it, of course.

K.-H.

Root Password

Question From: Adam Wilhite (a.m.wilhite from larc.nasa.gov)

Somehow the root password was lost or doesn't work anymore for one of the computers I administer. I went through your steps to reboot with init=/bin/sh... My problem is when I try to mount /usr. It says it can't find /usr. I would really appreciate your help.

thanks,

adam wilhite

Hi,

Your error message about not being able to find /usr suggests to me that "/usr" is not your mount point. Take a look in the file "/etc/fstab", which is the filesystem table.

Locate the entry which points to "/usr". Mount points are in the second field, i.e. right after the device entries such as "/dev/hda1".

When you have found it, type in the correct value, using:

mount /path/to/mountpoint

Failing that, try issuing the command:

mount -a

That will tell your computer to (try to) mount all the entries within the file "/etc/fstab". You can then check what has been mounted by typing in the following:

mount
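The lookup in /etc/fstab can also be done with a few lines of shell. This is only a sketch: fstab_device is a made-up helper, and the sample table below is invented rather than taken from Adam's machine.

```shell
#!/bin/sh
# fstab_device MOUNTPOINT FILE: print the device (first field) of the
# fstab-format FILE entry whose mount point (second field) is MOUNTPOINT.
fstab_device() {
    while read -r dev mnt rest; do
        case $dev in
            ''|'#'*) continue ;;      # skip blank lines and comments
        esac
        if [ "$mnt" = "$1" ]; then
            printf '%s\n' "$dev"
            return 0
        fi
    done < "$2"
    return 1                          # no entry for that mount point
}

# Invented sample standing in for /etc/fstab.
sample=$(mktemp)
cat > "$sample" <<'EOF'
# device    mountpoint  type  options   dump pass
/dev/hda1   /           ext2  defaults  1 1
/dev/hda5   /usr        ext2  defaults  1 2
/dev/hda6   swap        swap  defaults  0 0
EOF

fstab_device /usr "$sample" || echo "/usr has no entry of its own"
rm -f "$sample"
```

If no entry turns up for /usr in the real file, /usr is probably just a directory on the root filesystem and there is nothing separate to mount.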

HTH,

Thomas Adam

RH7.1 switch to KDE login as default

Questions From: Larry Sanders (lsanders from hsa-env.com) and Jim (anonymous)

Having completed the installation of Red Hat 7.1 with both GNOME and KDE, the default graphical login is GNOME. How is this changed to the KDE default login for the system? -- Larry

I love and use both the KDE and Gnome desktops. I have a strong aesthetic preference, though, for the KDE login manager.

My RedHat 7.1 install has resisted all efforts to switch it from its default Gnome login manager to the KDE one. This isn't a big deal but where is this managed and how do I make the change? -- Best Regards, Jim

Try running the program "switchdesk". It might be on the GNOME menu, but you can run it from a command-line quicker. It will let you switch default desktops and even different desktops for different displays (although that tends to be a bit problematic).

--

Regards,

Faber Fedor

3d linux

Hello!

This week I found some interesting programs that may help Philippe CABAL (a little bit).

They are front ends (open source, GPL) to rendering engines that are available for Linux and Windows:

BTW: K-3D is one of the best applications I have seen on Linux. Also, no other application needs as much time and memory to compile as K-3D.

http://www.dotcsw.com has a great page of links. There is a lot of rendering stuff (including many programs that are only expected so far).

I hope this helps.

Zdeno

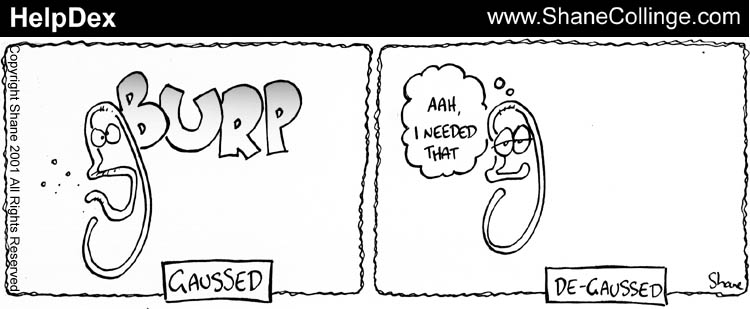

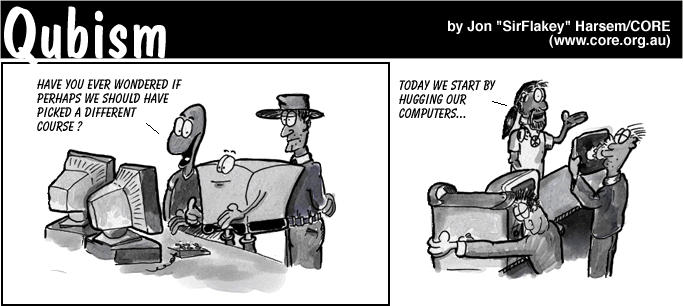

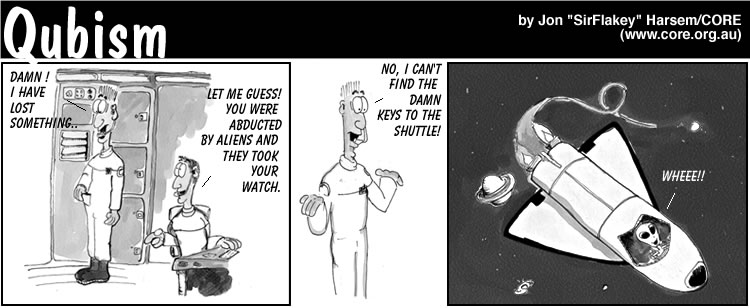

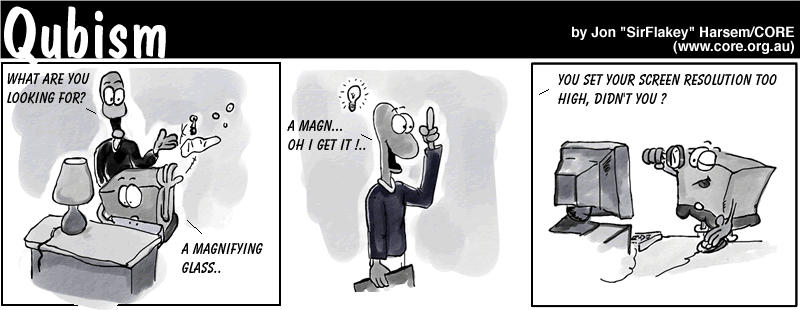

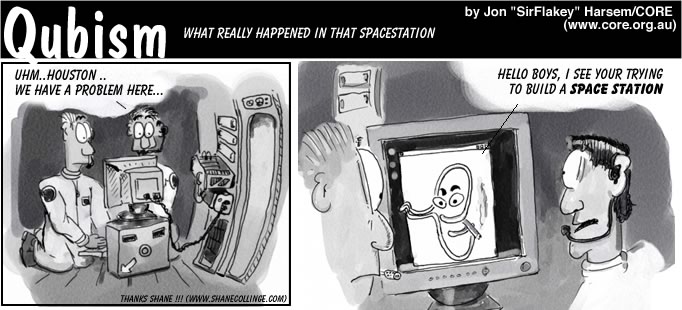

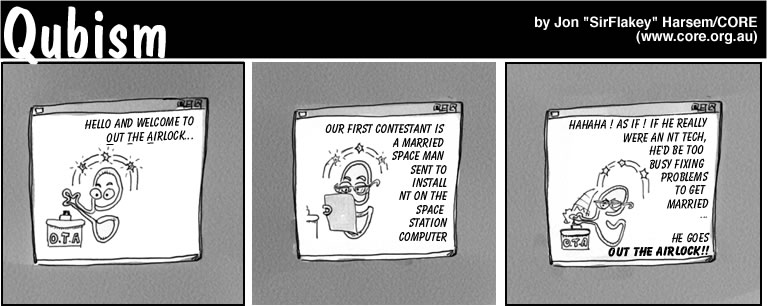

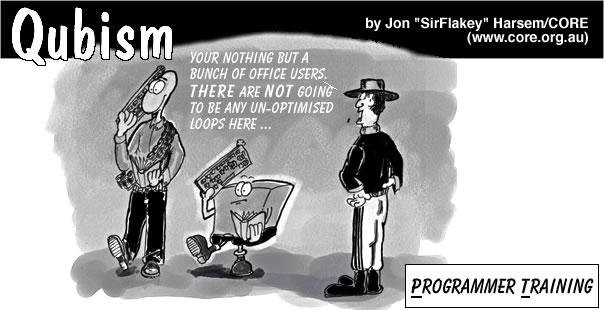

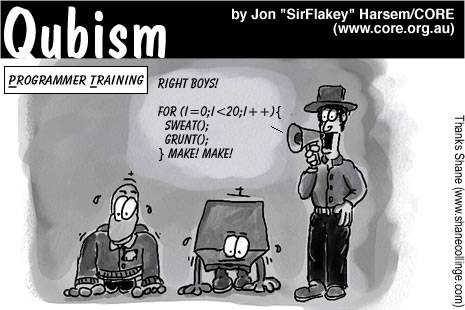

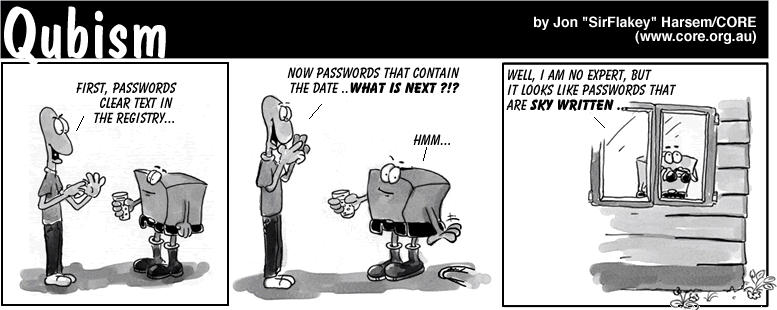

Here's a peek at Shane's sketchbook:

More HelpDex cartoons are at http://www.shanecollinge.com/Linux. This month, Shane has also been busy doing some illustrations (non-Linux).

When you boot Linux, do you get a "login:" prompt on a bunch of virtual consoles and have to type in your username and password on each of them? Even though you're the only one who uses the system?

Well, stop it. You can make these consoles come up all logged on and at a command prompt at every boot.

In case you're thinking that password prompt is necessary for security, think again. Chances are that if someone has access to your console keyboard, he also has access to your floppy disk drive and could easily insert his own system disk in there and be logged in as you in three minutes anyway. That password prompt is about as useful as an umbrella for fish.

The method I'm going to describe for getting your virtual consoles logged in automatically consists of installing some software and changing a few lines in /etc/inittab. Before I do that, I'll take you on a mind-expanding journey through the land of getties and logins to see just how a Unix user gets logged in.

First, I must clarify that I'm talking about virtual consoles -- those are text consoles that you ordinarily switch between by pressing ALT-F2 or CTL-ALT-F2 and such. Shells that you see in windows on a graphical desktop are something else entirely. You can make those windows come up automatically at boot time too, but the process is quite a bit different and not covered by this article.

Also, consider serial terminals: The same technique discussed in this article for virtual consoles works for serial terminals, but may need some tweaking because the terminal may need some things such as baud rate and parity set.

In the traditional Unix system of old, the computer was in a locked room and users accessed the system via terminals in another room. The terminals were connected to serial ports. When the system first came up, it printed (we're back before CRT terminals -- they really did print) some identification information and then a "login:" prompt. Whoever wanted to use the computer would walk up to one of these terminals and type in his username, then his password, and then he would get a shell prompt and be "logged in."

Today, you see the same thing on Linux virtual consoles, though it doesn't make as much sense if you don't think about the history.

Let's go through the Linux boot process now and see how that login prompt gets up there.

When you first boot Linux, the kernel creates the init process. It is the first and last process to exist in any Linux system. All other processes in a Linux system are created either by init or by a descendant of init.

The init process normally runs a program called Sysvinit or something like it. It's worth pointing out that you can really run any program you like as init, naming the executable in Linux boot parameters. But the default is the executable /sbin/init, which is usually Sysvinit.
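The choice of init program can be illustrated with a small shell sketch that looks for an init= entry in a kernel command line and otherwise falls back to /sbin/init, as described above. init_program is a hypothetical helper and the command-line strings are invented examples; on a running system the real command line can be read from /proc/cmdline.

```shell
#!/bin/sh
# init_program CMDLINE: print the program named by "init=" in CMDLINE,
# or /sbin/init when no such parameter is present.
init_program() {
    for word in $1; do                # split the command line on whitespace
        case $word in
            init=*) printf '%s\n' "${word#init=}"; return 0 ;;
        esac
    done
    printf '%s\n' /sbin/init
}

init_program "root=/dev/hda1 ro"
init_program "root=/dev/hda1 ro init=/bin/sh"
```

The second call corresponds to booting with init=/bin/sh, which drops you straight into a shell instead of starting Sysvinit.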

Sysvinit takes its instructions from the file /etc/inittab.

To see how init works, do a man init and man inittab.

If you look in /etc/inittab, you will see the instructions that tell it to start a bunch of processes running a getty program, one process for each virtual console. Here is an example of a line from /etc/inittab that tells init to start a process running a getty on the virtual console /dev/tty5:
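Such an entry has four colon-separated fields: an identifier, the runlevels it applies to, an action, and the command to run. The sketch below splits one into its parts. The sample line is an assumed, typical agetty entry for tty5 (the identifier, runlevels and baud rate on your system may well differ), and inittab_fields is a made-up helper.

```shell
#!/bin/sh
# inittab_fields LINE: print the four fields of an inittab entry,
# one per line, labelled.
inittab_fields() {
    # IFS is set only for this read so the line is split on colons; the
    # last variable keeps the whole command, even if it contains colons.
    printf '%s\n' "$1" | {
        IFS=: read -r id runlevels action process
        printf 'id:        %s\n' "$id"
        printf 'runlevels: %s\n' "$runlevels"
        printf 'action:    %s\n' "$action"
        printf 'process:   %s\n' "$process"
    }
}

# Assumed example entry, not copied from any particular system.
inittab_fields 'c5:235:respawn:/sbin/agetty 38400 tty5 linux'
```

The respawn action tells init to start the getty again whenever it exits, which is why a fresh login prompt appears after you log out.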

In this case, the particular getty program is /sbin/agetty. On your system, you may be using /sbin/mingetty or any of a bunch of other programs. (Whatever the program, it's a good bet it has "getty" in its name. We call these getties because the very first one was simply called "getty," derived from "get teletype".)

Getty opens the specified terminal as the standard input, standard output, and standard error files for its process. It also assigns that terminal as the process' "controlling terminal" and sets the ownership and permissions on the terminal device to something safe (resetting whatever may have been set by the user of a previous login session).

So now you can see how the login prompt gets up on virtual console